Privacy

By Evgeny Morozov

In 1967, The Public Interest, then a leading venue for high-brow policy debates, published a provocative essay by Paul Baran, one of the fathers of packet switching. Titled “The Future Computer Utility,” the essay speculated that the future of computing lay in the utility model, whereby a few centralized mega-computers would provide “information processing…the same way one now buys electricity.”

High costs, reasoned Baran, made it impractical for individual users to buy a computer. “It’s like buying a cow for a little cream for our coffee,” he quipped. In fact, even “the word ‘computer’ is by now a misnomer…it is the automation of information flow that is really at the heart of the computer revolution.” Thus, he wrote:

…while it may seem odd to think of wanting to pipe computing power into homes, it may not be as far-fetched as it sounds… Our home computer console will be used to send and receive messages – like telegrams. We could check to see whether the local department store has the advertised sports shirt in stock in the desired color and size. We could ask when delivery would be guaranteed, if we ordered. The information would be up-to-the-minute and accurate. We could pay our bills and compute our taxes via the console. We would ask questions and receive answers from “information banks” – automated versions of today’s libraries. We would obtain up-to-the-minute listing of all television and radio programs…The computer could, itself, send a message to remind us of an impending anniversary and save us from the disastrous consequences of forgetfulness.

By the mid-1970s, the utility model was considered a failure. Expanding it nationwide was prohibitively expensive, and soon countercultural gurus like Steve Jobs could argue that it was actually cool to buy a cow to make your own cream for coffee. In 1967, Baran didn’t know this – nor could he imagine that cloud computing would eventually fulfill his vision. But he was prescient enough to worry that utility computing needed its own regulatory model. Here was an employee of the RAND Corporation – hardly a bastion of Marxist thought – fretting about the concentration of market power in the hands of large computer utilities and demanding state intervention to avoid it. Baran also wanted policies that could “offer maximum protection to the preservation of the rights of privacy of information”:

Highly sensitive personal and important business information will be stored in many of the contemplated systems. Information will be exchanged over easy-to-tap telephone lines. At present, nothing more than trust – or, at best, a lack of technical sophistication – stands in the way of a would-be eavesdropper. All data flow over the lines of the commercial telephone system. Hanky-panky by an imaginative computer designer, operator, technician, communications worker, or programmer could have disastrous consequences. As time-shared computers come into wider use, and hold more sensitive information, these problems can only increase. Today we lack the mechanisms to insure adequate safeguards. Because of the difficulty in rebuilding complex systems to incorporate safeguards at a later date, it appears desirable to anticipate these problems.

All the privacy solutions you hear about are on the wrong track.

To read Baran’s essay today is to realize that our contemporary “privacy problem” is not particularly contemporary. It’s not just a consequence of Mark Zuckerberg selling his soul (and our profiles) to the NSA. The problem was recognized early on – and little was done about it.

All of Baran’s envisioned uses for “utility computing” – minus, perhaps, “information banks” and taxes – are purely commercial. Ordering shirts, paying bills, looking for entertainment, conquering forgetfulness: this is not the Internet of “virtual communities,” “netizens,” and hippies. Baran simply imagined that networked computing would allow us to do things that we already do without networked computing: shopping, entertainment, research. But also: espionage, surveillance, voyeurism. His “computer revolution” sounds very un-revolutionary.

Baran’s is one of the many essays on the virtues of utility computing published at the time. Poring over those pieces today, it’s hard not to notice that one popular take on the contemporary situation – a narrative we can call “the Internet’s fall from grace” – is, at best, incomplete. It isn’t true that the Internet subverted the logics of capitalism and bureaucratic administration that had dominated world history for the last three centuries and carved out a unique and genuine space of dissent, and that it all began to unravel in the early 2000s, due to 9/11 and the rise of Google, Facebook, social media, the Internet of Things, Big Data. Believing that to be true leads you to think that the task ahead is to restore this pristine and utopian space – about to be lost to marketers and spooks – by passing stricter laws, giving users more control, building better encryption tools.

While “the Internet’s fall from grace” hypothesis identifies some symptoms of the current malaise, it fails at both diagnosis and prescription. Its proponents have become so confused about politics and history, so committed to their own reading of what the Internet was, is, and should be, that they can neither decipher the origins of the crisis nor see a plausible way out of it. What they don’t see is that laws, markets, or technologies won’t save us. Something else is needed: politics.

Worry less about the NSA and more about programs that don’t necessarily sound like surveillance.

Let’s address the symptoms first. Yes, the commercial interests of technology companies and the policy interests of government agencies have converged: both are interested in the collection and rapid-fire analysis of user data. Google and Facebook settled on advertising-based revenue models, so they are constantly propelled to collect ever more data to boost the effectiveness of their ads. Government agencies need the same data – which they can collect either on their own or in cooperation with technology companies – to go on with their own programs.

Many of those programs deal with national security. But such data can also be used to undermine privacy in less obvious ways. The Italian government, for example, has begun analyzing online receipts to identify tax cheaters who spend more than they claim as income. Once mobile payments fully replace cash transactions – with Google and Facebook as intermediaries – the data collected by these companies would be indispensable to tax collectors.

On another front, technocrats like Cass Sunstein, the former director of the Office of Information and Regulatory Affairs at the White House and a leading proponent of “nanny statecraft” – nudging people to do certain things – hope that the collection and instant analysis of data about individuals can help steer our behavior and help solve problems like obesity or climate change or drunk driving. A new book by three British academics – Changing Behaviours: On the Rise of the Psychological State – features a long list of such schemes at work in the UK, where the government’s nudging unit – inspired by Sunstein – has been so successful that it’s set to become a for-profit operation.

Equipped with Google Glass or a smartphone, we could be pinged whenever we are about to do something stupid, unhealthy or unsound. We don’t need to know what we are doing wrong: the system does the moral calculus on its own. We citizens take on the role of quiet information machines that feed the techno-bureaucratic complex with our data. And why wouldn’t we, if we are promised slimmer waistlines, cleaner air, longer (and safer) lives in return?

This logic of preemption is no different from that of the NSA in its fight against terror: let’s prevent problems rather than deal with their consequences. Even if we tie the hands of the NSA – by some combination of better oversight, stricter rules on data access, stronger and friendlier encryption technologies – the data hunger of other state institutions would remain. They will justify it, all right: on issues like obesity or climate change – where we are facing a ticking-bomb scenario – a little deficit of democracy can go a long way.

What kind of deficit? The new digital infrastructure, thriving as it does on real-time data contributed by citizens, allows the technocrats to take politics, with all its noise, friction, and discontent, out of the political process, replacing the messy stuff of coalition-building, bargaining, and deliberation with the clean and efficient veneer of data-powered administration. This phenomenon has a meme-friendly name – “algorithmic governance” – and it’s enthusiastically promoted by the usual suspects in Silicon Valley. In essence, information-rich democracies have reached a point where they can solve public problems without having to explain or justify themselves to citizens. Instead, they can simply appeal to our own self-interest – and they know enough about us to engineer a perfect, highly personalized, irresistible nudge.

Here’s a postcard from a future where algorithmic governance runs supreme. Your smartphone or Google Glass shows you a prompt that convinces you to eat broccoli or exercise. Neither you nor the technocrat who sanctioned the prompt knows how exactly it was generated; you see, it’s all about proprietary algorithms, Big Data, and self-learning systems! As long as you eat your broccoli, no one feels the need to explain why this is a good way to solve problems either. It’s good for your health – so how can it be bad for society?

Note that as we are empowering individuals through self-knowledge, a public, highly contentious problem like “health care” is re-imagined as a purely private problem like “health.” Instead of enabling citizens to come together, clash, deliberate, and imagine an alternative health care system, we use feedback loops, sensors, and instant information analysis to reduce “health care” to a problem that each of us is supposed to address as an individual – within the institutional confines of the current system. So the system perpetuates itself, boosting its own efficiency, chiefly by demanding more and more perfection of its individual components – that is, us.

Privacy is not a goal in itself. It’s a means of achieving democracy.

Another postcard – but from the past. The year is 1985, and Spiros Simitis, Germany’s leading privacy scholar and practitioner – at the time, he serves as the privacy commissioner of the German land of Hesse – is addressing the University of Pennsylvania Law School. His lecture explores the very same issue – “the automation of information flow” – that preoccupied Baran. But Simitis doesn’t lose sight of the history of capitalism and democracy, so he sees recent technological changes in a far more ambiguous light.

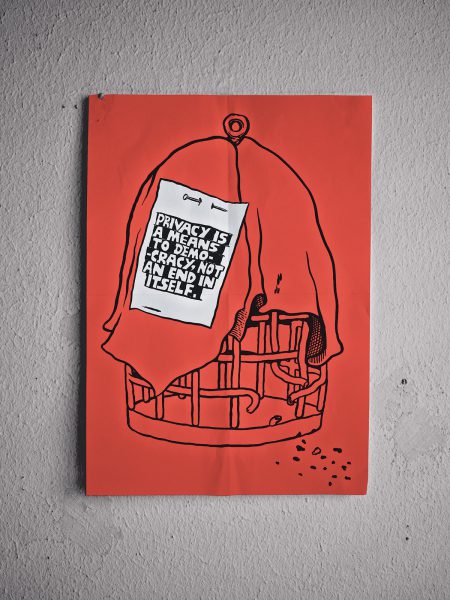

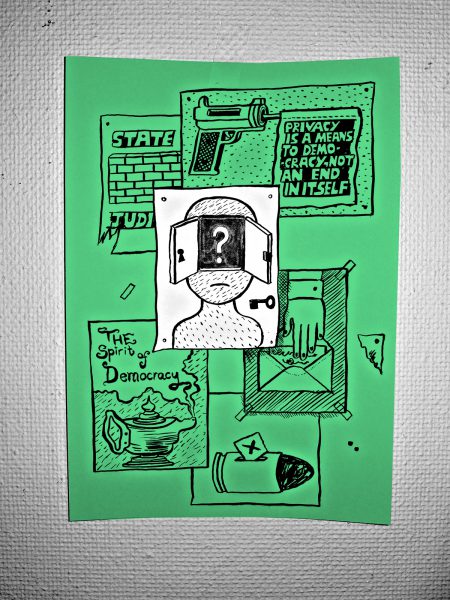

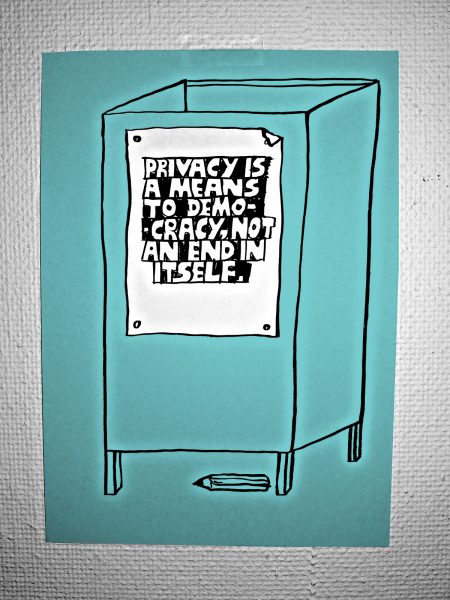

Simitis’s lecture reveals that our contemporary “privacy problem” is not just a consequence of the triumph of the Internet, with all the dreary and worn-out arguments that such is the cost of innovation, that we must make trade-offs. Without grounding the privacy problem in a robust historical narrative, we risk underestimating the enemy and the enormity of the task ahead. The roots of the problem have to do with how much risk, imperfection, improvisation and inefficiency we could tolerate in the name of keeping the democratic spirit alive. Simitis recognizes that privacy is not an end in itself – it’s a means of achieving a certain ideal of democratic politics, where citizens are trusted to be more than just self-content suppliers of information to all-seeing and all-optimizing technocrats. “Where privacy is dismantled,” he warns, “both the chance for personal assessment of the political…process and the opportunity to develop and maintain a particular style of life fade.” The “privacy problem” is a “democracy problem” in disguise.

Three technological trends underpin Simitis’s analysis. First, now that every sphere of social interaction is mediated by information technology – he warns of “the intensive retrieval of personal data of virtually every employee, taxpayer, patient, bank customer, welfare recipient, or car driver” – privacy is no longer a problem of some unlucky fellow caught off-guard in an awkward situation; rather, it’s everyone’s problem. Second, new technologies – Simitis mentions smart cards and videotex – not only make it possible “to record and reconstruct individual activities in minute detail” but also normalize surveillance, weaving it into our everyday life. Third, the personal information recorded via these new technologies allows social institutions to enforce standards of behavior, triggering “long-term strategies of manipulation intended to mold and adjust individual conduct” of citizens.

Modern institutions certainly stand to gain from all this. Insurance companies can tailor cost-saving programs to the needs and demands of individual patients, hospitals and the pharmaceutical industry. Police can use newly available databases and various “mobility profiles” to identify potential criminals and locate current suspects. Welfare agencies can suddenly unearth fraudulent behavior.

But what about our roles as citizens? In case after case, Simitis discovers that we stand to lose. Instead of getting more context for decisions, we get less. Instead of more accurate and less Kafkaesque decision-making by bureaucratic systems, we get even more confusion as decision-making is automated and no one knows how exactly the algorithms work. Instead of getting a clearer picture of what makes our social institutions work, we, as citizens, get only more confused. Despite their promise for greater personalization and empowerment, the interactive systems only give us an illusion of more participation. As a result, “interactive systems … suggest individual activity where in fact no more than stereotyped reactions occur.”

If you think that Simitis was describing a future that never came to pass, consider a recent paper on the transparency of automated prediction systems by Tal Zarsky, one of the world’s leading experts on the ethics of data-mining. He notes that “data mining might point to individuals and events, indicating elevated risk, without telling us why they were selected.” As it happens, the degree of interpretability is one of the most consequential policy decisions to be made in designing data-mining systems. There are vast implications for democracy here:

a non-interpretable process might follow from a data-mining analysis which is not explainable in human language. Here, the software makes its selection decisions based upon multiple variables (even thousands). In these contexts, the role of the analyst is minimized. The lack of interpretation not only reflects on the role of the analysts, but also on the possible feedback to individuals affected by the data-mining process. It would be difficult for the government to provide a detailed response when asked why an individual was singled out to receive differentiated treatment by an automated recommendation system. The most the government could say is that this is what the algorithm found based on previous cases.

This is the future we are sleepwalking toward: Everything seems to work – and things might even be getting better – it’s just that we don’t exactly know why or how.

Too little privacy is dangerous. But so is too much privacy.

Simitis never says the magic words “Big Data” – there goes his invite to the TED Conference – but he still gets all the trends right. Free from dubious metaphysical assumptions about “the Internet age,” he actually arrives at a most original but cautious defense of privacy as a vital feature of a self-critical democracy. That defense deserves a close reading, for it shows why most of the contemporary responses to the privacy problem will fail unless they grasp how privacy can both enable and disable democracy – and not just the democracy of some abstract political theory set in ancient Greece – but of the messy, noisy democracy, with its never-ending contradictions, that we inhabit today.

Ever since the French Revolution, our response to changes in automated information processing has been to view them as a personal problem for the affected individuals. A case in point is the seminal article “The Right to Privacy” by Louis Brandeis and Samuel Warren. Writing in 1890, they sought a right to be left alone – to live an undisturbed life, away from paparazzi and intruders. According to Simitis, they expressed a desire, common to many self-made individuals at the time, “to enjoy, strictly for themselves and under conditions they determined, the fruits of their economic and social activity.”

A laudable goal; modern American capitalism wouldn’t have developed in its absence. But this right, disconnected from any matching responsibilities, could also sanction consumerist withdrawal, shielding us from the outside world, with its constant demands on our time and attention, undermining the foundations of the very democratic regime that has made this right possible. This is not a problem specific to the right to privacy. For some contemporary thinkers – like the French historian and philosopher Marcel Gauchet – democracies risk falling victim to their own success: having instituted a legal regime of rights that allow citizens to pursue their own private interests without any reference to what’s good for the public, they risk exhausting the very resource that has allowed them to flourish.

When everyone demands their rights but is unaware of their responsibilities, the political questions that have defined democratic life over centuries – How should we live together? What is in the public interest and how do I balance my own interest with that of the public? – are subsumed into legal, economic, or administrative domains. “The political” and “the public” no longer register as domains at all; laws, markets, and technologies displace debate and contestation as preferred, less messy solutions.

But a democracy without the participation of engaged citizens doesn’t sound much like a democracy – and might not even survive as one. This was obvious to Thomas Jefferson, who, while wanting every citizen to be “a participator in the government of affairs,” also believed democratic participation involves a constant tension between public and private life. A democracy that believes, as Simitis put it, that the citizen’s access to information “ends where the bourgeois’ claim for privacy begins” won’t last as a well-functioning democracy. No, a democracy requires transparent and readily available data – but not only for the technocrats’ sake! This data is needed so that citizens can evaluate, form opinions and debate – and, occasionally, fire the technocrats.

But if every citizen were to fully exercise their right to privacy, no such debate would be possible. Thus, the line between privacy and transparency is always in need of adjustment – even more so in times of rapid technological change. That balance itself is a deeply political issue, to be settled through public debate. As Simitis puts it, “far from being considered a constitutive element of a democratic society, privacy appears as a tolerated contradiction, the implications of which must be continuously reconsidered.”

In the last few decades, as we began to generate more data, our state institutions have become addicted to it. Remove the data, clog the feedback loops – and it’s not clear if they can function and think independently at all. We, as citizens, are also caught in an odd position: our reason for disclosing this data is not that we feel deep concern for the public good. No, we generate it – on Google or via self-tracking apps – out of self-interest. We are too cheap not to use free services subsidized by advertising. Or we want to sell our own data. Or we want to track our diet – and then sell the data.

This is how we arrive at the current situation where an idea like “algorithmic governance” becomes respectable. Its proponents assume that, once they replace citizens with feedback loops and once privacy becomes just a shibboleth for consumerist disengagement, the ensuing democratic deficit won’t be lethal. So they turn politics into “public administration” and put it in a data-intensive auto-pilot mode. Citizens can relax and enjoy themselves, only to be nudged, occasionally, whenever we refuse to eat our broccoli or fantasize about buying an SUV. What happens in between nudging – let alone how exactly those algorithms keep humming in the background – is presented as of little consequence. This new system doesn’t need any citizens – only our smartphones.

Privacy is too important to be regulated by laws or market forces alone.

Most of this was obvious to Simitis back in 1985:

Habits, activities, and preferences are compiled, registered, and retrieved to facilitate better adjustment, not to improve the individual’s capacity to act and to decide. Whatever the original incentive for computerization may have been, processing increasingly appears as the ideal means to adapt an individual to a predetermined standardized behavior that aims at the highest possible degree of compliance with the model patient, consumer, taxpayer, employee, or citizen… In short, the transparency achieved through automated processing creates possibly the best conditions for colonization of the individual’s lifeworld. Accurate, constantly updated knowledge of her personal history is systematically incorporated into policies that deliberately structure her behavior.

What Simitis is describing here is the rapid construction of what I call “invisible barbed wire” around the intellectual space that defines us as citizens and political subjects. Big Data, with its many interconnected databases that feed on information and algorithms of dubious provenance, imposes severe constraints on how we mature politically and socially. The German philosopher Jürgen Habermas was right to warn – back in 1963 – that “an exclusively technical civilization…is threatened…by the splitting of human beings into two classes – the social engineers and the inmates of closed social institutions.”

The invisible barbed wire of Big Data limits us to some space – which might even look quiet and enticing enough – which is not of our own choosing and that we cannot rebuild or expand. The worst part is that we do not see it as such. The barbed wire remains invisible because we believe that we are free to go anywhere. Worse, there’s no one to blame: certainly not Google, Dick Cheney or the National Security Agency. It’s the result of many different logics and systems – of modern capitalism, of bureaucratic governance, of risk management – that get supercharged by the automation of information processing and by the depoliticization of politics.

The more information we reveal about ourselves, the denser and more invisible this barbed wire becomes. We gradually lose our reason-giving capacity; we no longer understand why things happen to us – as a result, we cannot debate them. Is it still a democracy if citizens are only needed as micro-level venture capitalists who fund the continuing function of the system with their data but have no need to steer it?

But not all is lost. We could also learn to perceive ourselves within this barbed wire and even cut through it. Privacy is the very resource that allows us to do that, and, should we be so lucky, even plan our escape route. At the risk of mixing too many metaphors, the task for responsible democrats is to convert the space marked by the Invisible Barbed Wire into a Room of One’s Own – an intellectual place where we can take stock of the situation, reflect, and develop as thinking subjects.

This is where Simitis hits on a truly revolutionary insight that is lost in contemporary privacy debates: no progress would be achieved on this front as long as privacy protection is “more or less equated with extending an individual’s right to decide when and which data are to be accessible.” The trap that many well-meaning privacy advocates fall into is thinking that if only they could provide the individual with more control over their data – through stronger laws or a robust property regime – then the Invisible Barbed Wire would become visible. It won’t – not if that data is eventually returned to the very institutions we seek to reform.

Why did Germany’s leading privacy intellectual end up waging what looks like an argument against a right to privacy? Well, rather than wanting to protect the right to privacy for its own sake, Simitis views privacy and publicity as both ingredients and impediments to vibrant democratic life. The exact balance between the two should never be settled; nor is it to be readjusted based on some abstract theory. It’s a political question par excellence, never to be reduced to theoretical answers and always kept open for renegotiation.

Think of privacy in ethical terms.

If we accept privacy as a problem of and for democracy, then popular fixes for the “privacy problem” look inadequate. For example, in his new book Who Owns the Future?, Jaron Lanier proposes to ditch one pole of privacy – the legal one – and focus on the economic one instead. “Commercial rights are better suited for the multitude of quirky little situations that will come up in real life than new kinds of civil rights along the lines of digital privacy,” he writes. On this logic, by turning our data into an asset that we might sell, we accomplish two things. First, we can control who has access to it and, second, we can make up for some of the economic losses caused by the disruption of everything analog.

Lanier’s proposal is not very original. Back in Code and Other Laws of Cyberspace (first published in 1999), Lawrence Lessig made an equally passionate plea for building a property regime around private data. Lessig wanted to have a robot – “an electronic butler” – who could negotiate with the websites that he visits: “The user sets her preferences once – specifies how she would negotiate privacy and what she is willing to give up – and from that moment on, when she enters a site, the site and her machine negotiate. Only if the machines can agree will the site be able to obtain her personal data.”

It’s easy to see where such reasoning can take us. We’d all have customized smartphone apps that work much like automated trading systems on stock markets, always incorporating the latest data to update the price of our personal data portfolio and make the right trades. So, if you are walking by a fancy store selling jewelry, the store might be willing to pay more to know your spouse’s birthday than if you are sitting at home watching TV.

The property regime can, indeed, strengthen privacy: If consumers want a good return on their data portfolio, they need to ensure that their data is not already available elsewhere. Thus, they either “rent” it the way Netflix rents movies or they sell it on the condition that it can only be used or resold on tightly controlled conditions. Some companies already offer “data lockers” to facilitate such secure exchanges.

So if you want to defend the “right to privacy” for its own sake, you shouldn’t feel too worried. The likes of the NSA would probably still get what they want, but that fear is different from the phobia that our private information might have become far too liquid and that we’ve lost control over its movements. A smart business model, coupled with a strong digital rights management regime, could fix that.

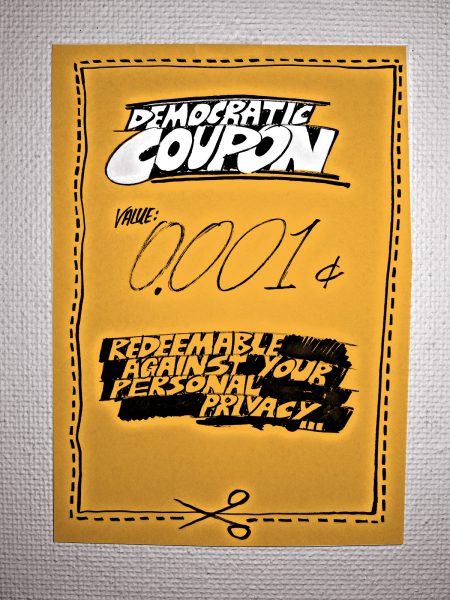

Meanwhile, government agencies committed to “nanny statecraft” will want this data as well. Perhaps they might pay a small fee or promise a tax credit for the privilege of nudging you later on – with the help of the data from your smartphone. Consumers win, entrepreneurs win, technocrats win. Privacy, in one way or another, is preserved also. Who exactly loses here? If you’ve read your Simitis, you know the answer: democracy does.

Our decision to disclose personal information – even if we disclose it only to insurance companies – will inevitably have implications for other people, many of them far less well-off. Anyone who thinks that a decision to self-track is just a choice and we all can opt out must have little knowledge of how institutions think. Once there are enough early adopters who self-track – and most of the early adopters are likely to gain something from self-tracking – those who refuse would no longer be seen as just quirky individuals exercising their autonomy. No, they will be seen as deviants with something to hide. If we never lose sight of this fact, our decision to self-track won’t be as easy to reduce to pure economic self-interest – at some point, morality might kick in.

Do I really want to share my data and get a coupon that I do not need if it means that someone else who is already working five jobs would have to pay more? If our initial goal is to promote economic efficiency, such ethical concerns are beside the point; we should delegate all decision-making to Lessig’s “electronic butlers.” But why should this be the case? That few of us have had moral pangs about such data-sharing schemes says little about the future. Before ecology became a global concern, few of us would think twice about taking public transport if we could drive. Before ethical consumption became a global concern, few of us would pay more for coffee that tastes the same but promises fair trade. Decisions that have previously resided solely in the economic domain can quickly spill into the ethical domain.

Consider a cheap t-shirt you see on eBay. It might be perfectly legal, but its “Made in Bangladesh” label makes us think twice about buying it. Perhaps, we fear that it was made by children or exploited adults. Or, having thought about it, we actually do want to buy the t-shirt because we hope that it might support the work of a child who would otherwise be forced into prostitution. What is the right thing to do here? We don’t know – so we do some research. Such scrutiny can’t apply to everything or we’d never leave the supermarket. But exchanges of information – the oxygen of democratic life – arguably fall into the category of “apply more thought, not less.” It’s not something to be negotiated by an “electronic butler” – not if we don’t want to cleanse our life of its political dimension.

Sabotage the system. Provoke more questions.

Reducing the privacy problem solely to the legal dimension is just as troubling. The question that we’ve been asking for the last two decades – How can we make sure that we have more control over our personal information? – is not the right question to ask, or at least it cannot be the only question to ask. Without learning and continuously relearning how automated information processing promotes and impedes democratic life, an answer to this question might prove worthless – especially if the democratic regime needed to implement whatever answer we come up with unravels in the meantime.

Intellectually at least, it’s clear what needs to be done: we must supplement the economic and legal dimensions with a political one, linking the future of privacy with the future of democracy in a way that refuses to reduce privacy either to markets or laws. First, we must politicize the debate about privacy and information-sharing. Articulating the existence – and the profound political consequences – of the invisible barbed wire would be a good start. We must scrutinize data-intensive problem-solving and expose its occasional anti-democratic character.

Second, we must learn how to sabotage the system – perhaps, by refusing to self-track at all. If refusing to track our calories or exercise routine is the only way to get our policy-makers to focus on solving problems at a higher level – rather than through nudging – “information boycotts” might be a reasonable option. Likewise, refusing to make money off your own data might be as political as refusing to drive a car or eat meat. Privacy can then reemerge as a deeply political instrument for keeping the spirit of radical democracy alive: we want private spaces because we still believe in our ability to reflect on what ails the world and actually find a way to fix it – and we’d rather not surrender this critical capacity to algorithms and feedback loops.

We should not abolish the right to privacy – we just need to balance it with a strong ethic. Otherwise, to keep saying that we all have a right to privacy as we keep surrounding ourselves with technological infrastructure that dispenses with us as citizens is akin to saying that we all have a right to clean air while also handing out coupons for free gas and heavily discounted SUVs.

Information needs an “inconvenient truth” moment – a moment where we would be made conscious of the fact that the current economic system has no way to correctly price the asset that is being traded simply because it has no idea what this asset is truly for. With climate change, at least the final scenario is easy to envision: melting ice caps, dead penguins, Bangladesh under water. It’s much harder to imagine the end of democratic public life – especially if it won’t entail the collapse of the consumer economy, with Netflix, Spotify, and Facebook keeping us entertained. How exactly do we know that democracy is dead?

Third, we must embrace provocation in design. The more we can do to reawaken our own imagination to the open-ended nature and revisability of our technological and informational infrastructure – and the connection between this infrastructure and political life – the better. This means, amongst other things, that a lot of privacy-focused work in design is misguided, as it seeks to prompt users to make better decisions about what to do with their data – grounded in the paradigm of rights and property – and not to help them discover the political dimension to data sharing. We must use design to provoke – and not just to assist in decision-making.

Consider how, over the last few decades, we have become extremely sensitive to questions of race. Partly through education and partly through public debate, we’ve grasped that one can cause offense without ever using the N-word. We know racism when we see it. When it comes to privacy, the best we can expect from design is not to nudge citizens to guard or share their private information but to reveal the hidden political dimension to various acts of information-sharing – without telling us what it is that we must do. We don’t want an electronic butler – we want an electronic provocateur. With enough prompts, we might eventually discern such dimensions on our own, without any technological prompts. Instead of building yet another app that could tell us how much money we can save if only we start monitoring our exercise routine, we need an app that can tell us how many people are likely to lose health insurance if the insurance industry has as much data as the NSA, most of it contributed by consumers like us.

Finally, we have to abandon fixed preconceptions of how our digital services work and interconnect. Otherwise, we’ll fall victim to the same logic that severed the imagination of so many well-meaning privacy advocates who thought that defending the “right to privacy” – not the preservation of democracy – should drive public policy. While many Internet activists would surely argue otherwise, what happens to “the Internet” is of only secondary importance. Just like with privacy, it’s the fate of democracy itself that should be our primary concern.

After all, back in 1967, Paul Baran, one of the fathers of “the Internet,” was lucky enough not to know what this whole “Internet” thing actually is. This, perhaps, explains both the boldness of his vision and his acute awareness of the many dangers ahead. A little bit of self-imposed amnesia could liberate us.